You Should Respond to Negative Steam Reviews

Looking at the efficacy of Dev Responses to User Reviews on Steam

Part 3 of a series started here.

Intro:

Every once in a while a game gets worse reviews than expected. It sucks. Your team has invested 3-5 years into a great game idea, and some critical bug you missed in testing tanks the global user score. It’s an emotional gut punch, and the reality is that it’s a weight that will forever be associated with your team.

As stated in my post on Cyberpunk 2077, Steam user reviews have a direct impact on visibility and revenue, so even the most corporate of suits should care about them, especially if you are on the bubble of “Mixed” or “Mostly Positive”.

Every game that has clawed its way back up from review purgatory has done so primarily on the strength of new reviews. Just 12.7% of Steam user reviews are ever updated, so the first step to fixing reviews should be ensuring that new users are having a good experience.

But I want to focus specifically on whether there is something we can do with existing negative reviews. Steam offers a way for developers to respond directly to user reviews, but very few actually do. Less than 0.5% of Steam user reviews have a response from the developer, and half of those are concentrated in just 79 games.

Key Takeaways:

Developer responses to negative reviews on Steam pay off: on average, ~12.1% of negative reviews that get a developer response end up flipped to positive. It’s worth your time. Gamers want to feel heard, and this is an effective tool.

Developer responses to positive reviews also have a slight positive impact on reviews. More data is needed to say this conclusively.

These are Steam user reviews. Serious business, but don’t take them too seriously.

Methodology

There is some fuzzy math here, so I want to explain exactly what I’m doing. Steam’s review API provides one record for each user review. That record includes timestamps for “review creation”, “latest review update”, and “developer response”, along with whether the review is currently positive or negative. Unfortunately, that means that unless we scrape a review multiple times over the course of several months, we can’t see whether a given review has flipped; we can only infer it from surrounding variables. In this case, I’m doing a few things to estimate what reviews looked like before they were updated:
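As a rough sketch of the record described above, the snippet below parses one review object into its three timestamps plus the current positive/negative flag. The JSON key names (`recommendationid`, `voted_up`, `timestamp_created`, `timestamp_updated`, `timestamp_dev_responded`) are my assumption of how Steam’s public review JSON labels these fields, so verify them against a live response before relying on them. The `SteamReview` class and `parse_review` function are hypothetical names.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class SteamReview:
    """One user review, reduced to the fields this analysis needs."""
    review_id: str
    voted_up: bool                # current state: True = positive
    created: int                  # Unix timestamp: review creation
    updated: int                  # Unix timestamp: latest review update
    dev_responded: Optional[int]  # Unix timestamp: developer response, if any


def parse_review(raw: dict) -> SteamReview:
    # ASSUMPTION: key names modeled on Steam's review JSON; check before use.
    responded = raw.get("timestamp_dev_responded")
    return SteamReview(
        review_id=str(raw["recommendationid"]),
        voted_up=bool(raw["voted_up"]),
        created=int(raw["timestamp_created"]),
        updated=int(raw["timestamp_updated"]),
        # Treat a missing or zero timestamp as "no developer response".
        dev_responded=int(responded) if responded else None,
    )


raw = json.loads(
    '{"recommendationid": "123", "voted_up": false,'
    ' "timestamp_created": 1600000000, "timestamp_updated": 1650000000,'
    ' "timestamp_dev_responded": 1620000000}'
)
review = parse_review(raw)
print(review.voted_up, review.dev_responded)  # False 1620000000
```

Note that a single snapshot like this only shows the review’s current state, which is exactly why the rest of the method has to infer earlier states from timestamps.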

AI text analysis of developer responses.

Grouping reviews that have received developer responses by title and by the relative timing of the review update, to see what types of reviews a developer is responding to.

For example, if the timestamp for “review update” is prior to “developer response”, we know the user has not updated their review since the developer responded. If we look at all the reviews a developer has responded to that have not been updated since that response and find that they are all negative, we can infer the developer is primarily responding to negative reviews.
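The timestamp rule fits in a few lines. This is a minimal sketch, assuming each review has already been reduced to its current positive/negative flag plus two timestamps; `ReviewRecord`, `Bucket`, and `classify` are hypothetical names. Treating an update at exactly the response time as “not updated since” is my own tie-breaking choice.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Bucket(Enum):
    NO_DEV_RESPONSE = "No Dev Response"
    NOT_UPDATED_SINCE_RESPONSE = "User did not update after Dev Response"
    UPDATED_AFTER_RESPONSE = "User updated after Dev Response"


@dataclass
class ReviewRecord:
    voted_up: bool                # current state: True = positive
    updated: int                  # Unix timestamp of latest review update
    dev_responded: Optional[int]  # Unix timestamp of developer response, or None


def classify(review: ReviewRecord) -> Bucket:
    if review.dev_responded is None:
        return Bucket.NO_DEV_RESPONSE
    # Last update at or before the response: the review still shows the
    # state the developer was responding to.
    if review.updated <= review.dev_responded:
        return Bucket.NOT_UPDATED_SINCE_RESPONSE
    # The user came back after the developer responded.
    return Bucket.UPDATED_AFTER_RESPONSE


reviews = [
    ReviewRecord(voted_up=False, updated=100, dev_responded=200),
    ReviewRecord(voted_up=True, updated=300, dev_responded=200),
    ReviewRecord(voted_up=True, updated=100, dev_responded=None),
]
print([classify(r).name for r in reviews])
# ['NOT_UPDATED_SINCE_RESPONSE', 'UPDATED_AFTER_RESPONSE', 'NO_DEV_RESPONSE']

# The inference: positive share among responded-to reviews not updated since.
snapshot = [r for r in reviews if classify(r) is Bucket.NOT_UPDATED_SINCE_RESPONSE]
share_positive = sum(r.voted_up for r in snapshot) / len(snapshot)
print(share_positive)  # 0.0, so this developer appears to answer negative reviews
```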

The Averages

The vast majority of reviews (99.5%+) are in the blue “No Dev Response” category, but fortunately we have 100M reviews to look at, so there are still over 450k “Dev Responded” reviews to work with.

Blue: Reviews that have been updated by reviewers are 10.4% more negative. Because we don’t know whether the initial reviews were positive or negative, we can’t rule out the idea that users who update reviews simply leave lower review scores. I looked at games that have been out for a short amount of time (under three months), and the gap is much smaller, so it is likely that reviews trend negative over time.

Red: Devs tend to respond to negative reviews. As with the blue bars, reviews that were updated before a developer’s response, but not since, are 8.6 percentage points lower than un-updated reviews (dropping from 36.0% to 27.4%). Reviews that are updated AFTER a developer responded, though, are 63.5% positive! That’s a huge spike in positivity (36.1 percentage points above 27.4%), and while there are plenty of other reasons it could be higher, this is strong evidence that the developer response had a significant positive impact.
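The bar comparison boils down to a positivity rate per bucket and a difference between buckets. Because the quoted gaps are differences between percentages (36.0% to 27.4% is 8.6 points; 27.4% to 63.5% is 36.1 points), this sketch reports changes in percentage points. The rows are toy data for illustration, not figures from the dataset, and the function names are hypothetical.

```python
from collections import defaultdict


def positivity_by_bucket(rows):
    """rows: iterable of (bucket_name, voted_up) pairs.
    Returns {bucket_name: percentage of reviews that are positive}."""
    counts = defaultdict(lambda: [0, 0])  # bucket -> [positive, total]
    for bucket, voted_up in rows:
        counts[bucket][0] += int(voted_up)
        counts[bucket][1] += 1
    return {b: 100.0 * pos / total for b, (pos, total) in counts.items()}


def point_change(rates, before, after):
    """Difference in percentage points, e.g. 27.4% -> 63.5% is +36.1."""
    return rates[after] - rates[before]


# Toy data: 1 of 4 responded-to reviews positive with no update since,
# 3 of 4 positive among reviews updated after the response.
rows = (
    [("not_updated_since", False)] * 3 + [("not_updated_since", True)]
    + [("updated_after", True)] * 3 + [("updated_after", False)]
)
rates = positivity_by_bucket(rows)
print(rates)  # {'not_updated_since': 25.0, 'updated_after': 75.0}
print(point_change(rates, "not_updated_since", "updated_after"))  # 50.0
```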

By Title:

Next, let’s look at this at the title level and put the reviews the devs have responded to in two groups: “reviews that were updated after the developer responded” and “reviews that were not updated after the developer responded”. We then look at each group’s positivity rate. The idea is to gauge how positive the reviews the developer is responding to are, versus how positive those reviews become once the user updates them.

I’m filtering the graphic to just games that have 250 or more gamers who updated their review after a developer response. Good news for us: developers respond to different sorts of reviews. Some respond to mostly positive reviews, some respond exclusively to negative reviews, and most are a mix.
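A sketch of the per-title split, assuming the reviews with developer responses have already been tagged as updated or not updated since the response. “Game A” and “Game B” are placeholder titles with toy data, and `per_title_comparison` is a hypothetical name; the 250-update cut-off is the one used for the chart, lowered in the usage example so the toy data passes.

```python
from collections import defaultdict

MIN_UPDATED_AFTER = 250  # per-title cut-off used for the chart


def per_title_comparison(rows, min_updated_after=MIN_UPDATED_AFTER):
    """rows: iterable of (title, updated_after_response, voted_up) for reviews
    that received a developer response.
    Returns {title: (pct positive not updated since, pct positive updated after)}
    for titles with at least `min_updated_after` post-response updates."""
    # tally[title][updated_after] = [positive, total]
    tally = defaultdict(lambda: {False: [0, 0], True: [0, 0]})
    for title, updated_after, voted_up in rows:
        cell = tally[title][bool(updated_after)]
        cell[0] += int(voted_up)
        cell[1] += 1
    result = {}
    for title, groups in tally.items():
        stale_pos, stale_total = groups[False]
        upd_pos, upd_total = groups[True]
        if upd_total < min_updated_after or stale_total == 0:
            continue  # too few post-response updates to compare
        result[title] = (100.0 * stale_pos / stale_total,
                         100.0 * upd_pos / upd_total)
    return result


rows = [
    ("Game A", False, False), ("Game A", False, False),
    ("Game A", True, True), ("Game A", True, False),
    ("Game B", True, True),
]
print(per_title_comparison(rows, min_updated_after=2))
# {'Game A': (0.0, 50.0)}
```

Game B is dropped because it has only one post-response update, which mirrors why small samples are filtered out of the chart.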

Thanking positive reviewers, as we see with Gordian Quest, seems to give game reviews a small benefit if we assume our baseline of a 10.4% review decline post-update.

Developer responses to negative reviews seem to have a significant impact. In the case of Forza Horizon 4, 80% of the negative reviews the team responded to that users then updated turned positive. Several other games, like DOOM Eternal, Gunfire Reborn, Space Marine 2, and Elite Dangerous, saw 60%+ improvements.

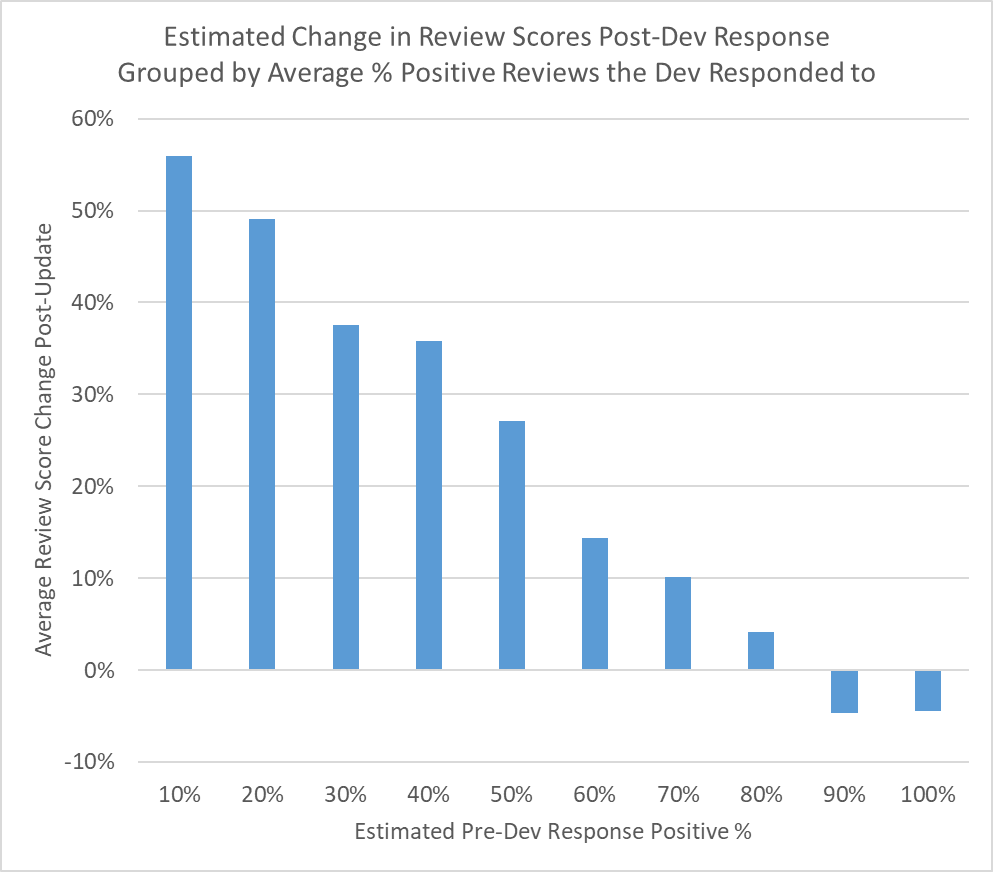

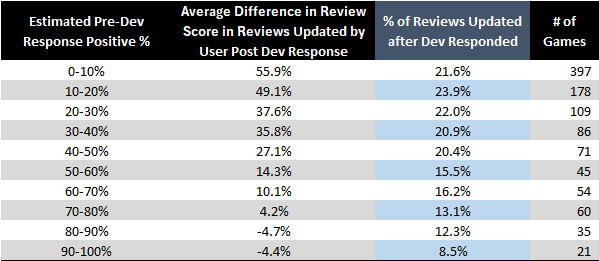

Aggregating by Dev Response Strategy

So the next step was to aggregate all the games based on what sort of reviews the developer was responding to. Here are the steps:

Filter for titles with at least 50 developer responses that resulted in a review update (about 1k games).

Note: Developer responses in smaller games are more likely to get updated user reviews and those reviews tend to be more positive so this likely understates impact.

Group games by the relative percentage of positive reviews in the “User did not Update after Dev Response” column. I’m going to call this “Estimated Pre-Dev Response Positive %”.

Bucket the games from the second step into 10-point bands of that estimate (0-10%, 10-20%, and so on).

The goal is to get an average of impact based on different developer response strategies and hopefully tease out the trend we saw above on the impact of responding to negative reviews.
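The filtering, grouping, and banding steps can be sketched as below. The input dictionaries and their keys (`updated_after`, `pre_pos_pct`, `post_pos_pct`) are hypothetical, and averaging each game’s change with equal weight is my assumption, since the exact weighting isn’t spelled out here. The numbers in the example are invented to show the mechanics.

```python
from collections import defaultdict
from statistics import mean


def band_label(pct):
    """Map a 0-100 percentage to a 10-point band such as '0-10%'.
    Exactly 100% is folded into the top band."""
    lower = min(int(pct // 10) * 10, 90)
    return f"{lower}-{lower + 10}%"


def aggregate_by_strategy(games, min_updated_responses=50):
    """games: iterable of dicts with hypothetical keys
      'updated_after': reviews updated after a dev response (count)
      'pre_pos_pct':   Estimated Pre-Dev Response Positive %
      'post_pos_pct':  positive % among reviews updated after the response
    Returns {band: (average change in points, number of games)}."""
    bands = defaultdict(list)
    for g in games:
        if g["updated_after"] < min_updated_responses:
            continue  # step 1: too few post-response updates
        change = g["post_pos_pct"] - g["pre_pos_pct"]
        bands[band_label(g["pre_pos_pct"])].append(change)  # steps 2 and 3
    return {b: (mean(c), len(c)) for b, c in sorted(bands.items())}


games = [
    {"updated_after": 60, "pre_pos_pct": 5.0, "post_pos_pct": 65.0},
    {"updated_after": 80, "pre_pos_pct": 8.0, "post_pos_pct": 58.0},
    {"updated_after": 10, "pre_pos_pct": 2.0, "post_pos_pct": 90.0},  # filtered
    {"updated_after": 70, "pre_pos_pct": 95.0, "post_pos_pct": 90.0},
]
print(aggregate_by_strategy(games))
# {'0-10%': (55.0, 2), '90-100%': (-5.0, 1)}
```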

And as a chart:

To make sure the point is clear, the chart has three columns:

The range of estimated percentage of positive reviews that the developer responded to.

For example, the 0-10% group is developers who responded to all or mostly negative reviews.

The change in review score, calculated as the positivity of all reviews that HAVE received an update since the developer responded vs. all reviews that HAVE NOT received an update since the developer responded.

The number of games in a particular group

When we aggregate this way, the trend is clear. When devs respond to mostly negative reviews, they see an average improvement of 55.9%. Put another way, more than half of players who leave negative reviews, but then come back and update their review after a developer has directly responded to them, change to positive.

It also looks like a handful of reviewers came back and updated their review to be negative after a developer had thanked them for their positive review. That could be a limitation of the method we are using. If it is real, it is still better than the typical review decline of 10%, so it’s a win, but it still sort of feels like a loss.

Update Rate

The other key number we needed to evaluate the efficacy of responding to reviews is the percentage of reviews that are actually updated after a developer responds. It turns out that a developer response does increase the chance of an update.

Reviews that have developer responses are 2x as likely to be updated. There are a few ways to read that, but I think there’s enough surrounding evidence to assume that a developer response increases the chances a user will update their review.

If we restrict the update rate to only reviews that are updated AFTER the developer responds and add it to our graph from earlier, we get column 3 in the chart below (highlighted in blue).
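A minimal sketch of the update-rate comparison, assuming rows of `(created, updated, dev_responded)` timestamps. For responded-to reviews it counts only updates made after the response, as described above; for reviews without a response, counting any update after creation is my assumption about the baseline. `update_rates` is a hypothetical name and the rows are toy data.

```python
from typing import Iterable, List, Optional, Tuple

Row = Tuple[int, int, Optional[int]]  # (created, updated, dev_responded or None)


def _rate(counts: List[int]) -> float:
    updated, total = counts
    return updated / total if total else 0.0


def update_rates(rows: Iterable[Row]) -> Tuple[float, float]:
    """Returns (update rate with a dev response, update rate without one)."""
    responded = [0, 0]      # [updated after the response, total]
    not_responded = [0, 0]  # [updated at all, total]
    for created, updated, dev_responded in rows:
        if dev_responded is None:
            not_responded[0] += int(updated > created)
            not_responded[1] += 1
        else:
            responded[0] += int(updated > dev_responded)
            responded[1] += 1
    return _rate(responded), _rate(not_responded)


rows = [(0, 0, None), (0, 5, None), (0, 0, None), (0, 0, None),
        (0, 3, 2), (0, 1, 2)]
with_resp, without_resp = update_rates(rows)
print(with_resp, without_resp, with_resp / without_resp)  # 0.5 0.25 2.0
```

The second responded-to row was updated before the response, so it does not count toward the post-response update rate.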

Key takeaways:

As mentioned before, reviews are much more likely to be updated if a developer has responded.

Players who leave negative reviews appear to be much more likely to update (21.6%) than positive reviewers (8.5%).

The Value

For this section, let’s assume that the developer is fixing bugs and responding to reviews when appropriate. If the developer responds to all negative reviews, we should expect a little over 12% of them to change to positive (a 21.6% update rate times a 55.9% flip rate is about 12.1%). If you consider that the general trend for “updated reviews” is around an 8-10% decline, this could be a ~20% improvement over doing nothing.
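The ~12% estimate looks like the product of two rates reported earlier: the 21.6% of negative reviewers who update after a response and the 55.9% average flip among developers who respond mostly to negative reviews. That reading is my inference, and applying both rates to your own game assumes they transfer. A tiny helper (hypothetical name) makes it easy to plug in your own numbers:

```python
def expected_flip_share(update_rate: float, flip_rate_if_updated: float) -> float:
    """Share of responded-to negative reviews expected to end up positive."""
    return update_rate * flip_rate_if_updated


share = expected_flip_share(0.216, 0.559)
print(f"{share:.1%}")  # 12.1%

# Hypothetical backlog of 500 negative reviews, all answered:
print(round(500 * share))  # 60
```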

If you are on the bubble of one of the review buckets, like “Very Positive” or “Mixed”, this is a powerful tool that could get you the small boost you need.
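To gauge whether responses could move a game across a bucket line, here is a back-of-the-envelope sketch: how many negative-to-positive flips a game needs, and how many responses that implies at a ~12.1% flip share. The 70% line for “Mostly Positive” is the commonly cited Steam threshold rather than something measured here, so treat it as an assumption. The function names and the example game are hypothetical.

```python
import math


def flips_needed(positive: int, total: int, threshold_pct: int) -> int:
    """Negative-to-positive flips needed so positive/total >= threshold_pct%.
    A flip turns an existing negative review positive, so total is unchanged."""
    target = (threshold_pct * total + 99) // 100  # integer ceil of the target count
    return max(0, target - positive)


def responses_needed(flips: int, flip_share: float) -> int:
    """Responses to negative reviews needed if flip_share of them flip."""
    return math.ceil(flips / flip_share)


# Hypothetical game: 680 of 1,000 reviews positive (68%), aiming for 70%.
flips = flips_needed(positive=680, total=1000, threshold_pct=70)
print(flips, responses_needed(flips, 0.121))  # 20 166
```

Integer arithmetic for the threshold avoids floating-point surprises right at the boundary. The example game has 320 negative reviews, so 166 responses is within reach.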